Description

In a sample retrieval scenario, we aim to have a mobile robot capable of dynamically, yet sensitively, extracting and collecting pieces of material from different target locations.

The envisioned system should be able to perform forceful and elaborate tasks, such as pushing, pulling, puncturing, scooping, and even drilling pieces attached to a surface, within an unstructured environment in a safe yet efficient manner. This requires a bimanual setup capable of exploring both manipulation and fixture setups, as well as coordinated and cooperative manipulation, while ensuring the stability of the workpiece, robustness, and real-time reactiveness.

The sequence of physical interactions with the environment, in turn, calls for efficient bimanual reactive planners that interact tightly with the control layers. Such planners should also consider whole-body configurations and motions to intelligently exploit object-to-robot, robot-to-environment, and object-to-environment contacts, keeping the robot and the workpiece stable under forceful operations such as scooping and drilling.

Furthermore, the robot needs to explore the use of different hand tools, which in turn requires efficient planning of different grasps and arm motions that are coordinated with the predicted motions, obstacles, and interaction forces during operation.

Such planning provides the robotic system with task-level expertise, yet the robot should also be able to learn elaborate aspects of the interaction, i.e., low-level skills that are intrinsically harder to model (e.g., the forces and adaptive impedance during scooping), through both teleoperation and kinesthetic teaching.

To realize such a system, this research project will investigate whole-body sequential manipulation planning for physical interaction within an unstructured environment, possibly using hand tools, to improve task-and-motion-planning efficiency while ensuring the stability of both the workpiece and the robotic system.

The development of such a unique system will be a crucial building block for any future endeavours in cooperative manipulation and in the use of bimanual systems for the inspection, maintenance, and repair of critical infrastructure.

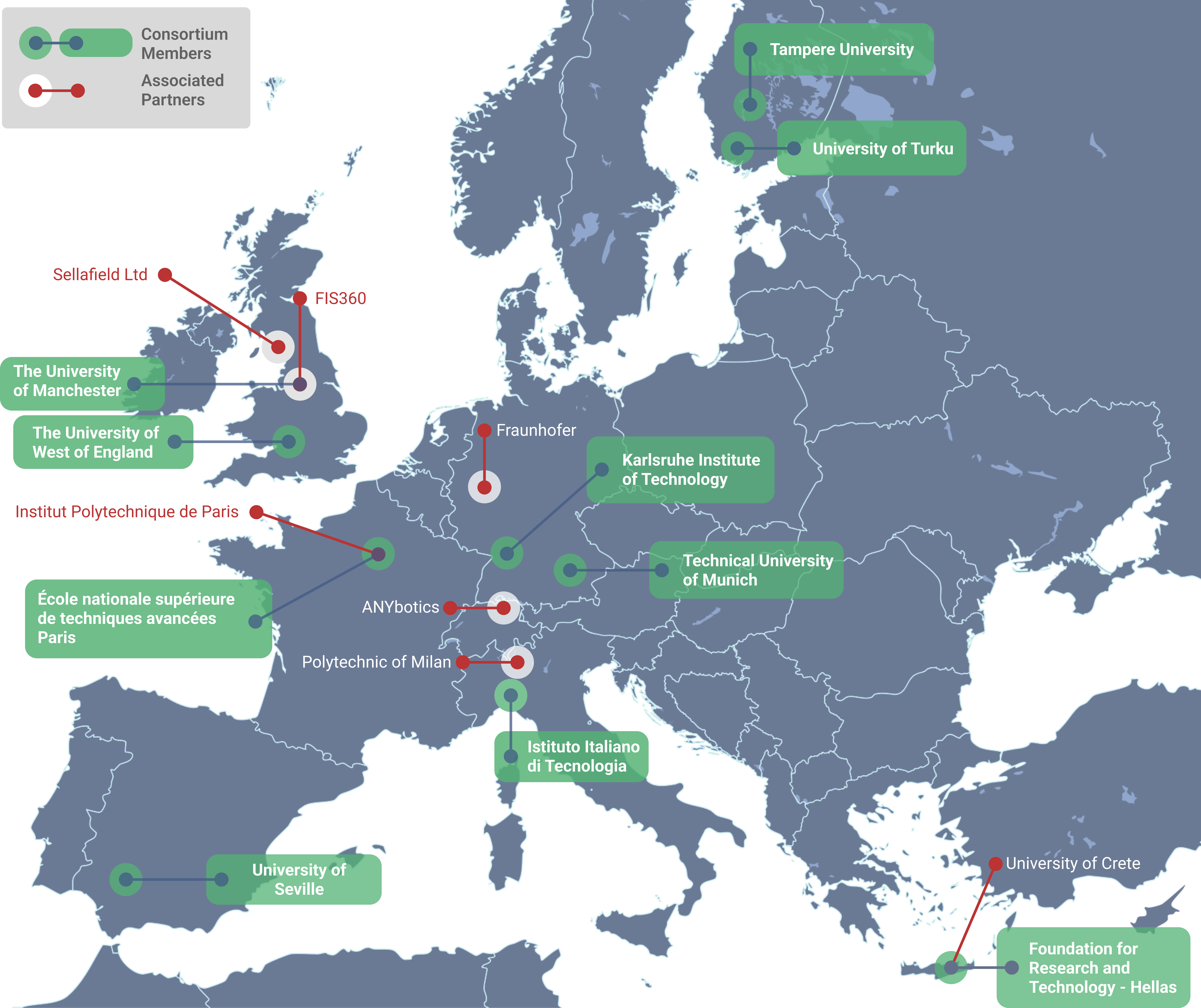

Host: Technical University of Munich, Germany

Located in the prosperous capital of Bavaria and home to over 39,000 students, the Technical University of Munich (TUM) is one of the world's top universities (among the top 4 European technical universities in The World University Ranking, top 10 in the Global University Employability Ranking, etc.). TUM benefits from the healthy mix of companies and startups of all sizes headquartered in the region, and since 2006 more than 800 startups have been developed by students and staff at the institution. Every year, TUM signs more than 1,000 research agreements with partners in both the scientific and business communities.

The TUM Munich Institute of Robotics and Machine Intelligence (MIRMI) is TUM's globally visible interdisciplinary research center for machine intelligence, which integrates robotics, artificial intelligence, and perception. MIRMI is a vibrant environment with multiple locations at the heart of Munich, including the Siemens Technology Center, home of the Neuroprosthetics and Human-Centered Robotics Lab headed by Prof. Cristina Piazza. TUM is collaborating with Dr Luis Figueredo of the Cyber-physical Health and Assistive Robotics Technologies (CHART) group at the University of Nottingham.

Supervisor(s): Prof. Cristina Piazza, Dr Luis Figueredo

Essential Skills & Qualifications

- An MSc qualification in Robotics, Computer Science, Electrical & Electronic Engineering, Mechatronic & Mechanical Engineering, or an equivalent discipline

- An MSc dissertation on a robotic topic

- Excellent programming skills

- Evidence of practical experimental work in robotics

- Evidence of a strong mathematical background

- Evidence of solid knowledge of manipulator modelling and control

Desirable Skills & Qualifications

- Evidence of using ROS/ROS2

- Knowledge of or experience in one or more of the following topics: motion planning, force/impedance control, robot learning, bimanual/multi-arm manipulation.